Endor Labs can detect AI models and list them as dependencies when you run a scan with the --ai-models flag. You can view the detected AI models in the AI Inventory section of the Endor Labs user interface.

You can define custom policies that flag specific AI providers, specific AI models, or models with low scores, so that their usage raises findings as part of your scan. Endor Labs provides AI model policy templates that you can use to create finding policies tailored to your organization’s needs. You can view these findings in Code Dependencies > AI Models on the Findings page.

Run the following command to detect AI models in your repository.

endorctl scan --ai-models

When you run a scan with the --ai-models option, Endor Labs downloads and runs Opengrep to detect AI models.

Endor Labs detects AI models using pattern matching and can use LLM processing to improve detection accuracy. LLM processing is disabled by default.
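To give a feel for what pattern-based detection means, the following is a rough sketch that uses grep instead of Opengrep. It is not the rule set that Endor Labs ships. It only looks for string literals passed to `from_pretrained(...)`, the loading method used by the Hugging Face transformers library, in Python files. The function name `find_hf_model_refs` is hypothetical, and the `--include` option assumes GNU or BSD grep.

```shell
# Illustration only: a crude grep stand-in for pattern-based detection.
# Prints each unique string literal passed to from_pretrained(...) in the
# Python files under the given directory (default: current directory).
find_hf_model_refs() {
  local dir="${1:-.}"
  # -r recurse, -h omit file names, -o print only the matched text,
  # -E use extended regular expressions
  grep -rhoE --include='*.py' "from_pretrained\(['\"][^'\"]+['\"]" "$dir" \
    | sed -E "s/^from_pretrained\(['\"]//; s/['\"]\$//" \
    | sort -u
}
```

Calling `find_hf_model_refs ./src` prints one model identifier per line. A text match like this misses model names held in variables or configuration files, which is one reason a real scanner relies on structured rules and, optionally, LLM processing.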

See Supported AI model providers for the list of external AI models detected by Endor Labs. Only Hugging Face models are scored, as they are open source and provide extensive public metadata. Models from all other providers are detected but not scored due to limited metadata.

Enable LLM processing for AI model detection

To enable LLM processing in Endor Labs:

- Select Settings from the left sidebar.

- Select System settings > Data privacy.

- Turn on Code Segment Embeddings and LLM Processing.

See Configure system settings for more information.

To generate AI model findings:

- Configure a finding policy to detect AI models with low scores and enforce organizational restrictions on specific AI models or model providers.

To disable AI model discovery, set ENDOR_SCAN_AI_MODELS=false in your scan profile.

AI model detection

The following table lists the AI model providers that Endor Labs currently supports for model detection. For each provider, the table shows the supported programming languages, whether model scoring is available, and a reference link to the provider’s API documentation.

| AI model provider | Supported languages | Endor score | Reference |
|---|---|---|---|
| Hugging Face | Python | ✓ | https://huggingface.co/docs |
| OpenAI | Python, JavaScript, Java (beta), Go (beta), C# | ✗ | https://platform.openai.com/docs/libraries |
| Anthropic | Python, TypeScript, JavaScript, Java (alpha), Go (alpha) | ✗ | https://docs.anthropic.com/en/api/client-sdks |
| Google | Python, JavaScript, TypeScript, Go | ✗ | https://ai.google.dev/gemini-api/docs/sdks |
| AWS | Python, JavaScript, Java, Go, C#, PHP, Ruby | ✗ | https://docs.aws.amazon.com/bedrock/latest/APIReference/welcome.html#sdk |
| Perplexity | Python | ✗ | https://docs.perplexity.ai/api-reference/chat-completions-post |
| DeepSeek | Python, JavaScript, Go, PHP, Ruby | ✗ | https://api-docs.deepseek.com/api/deepseek-api |
| Azure OpenAI | C#, Go, Java, Python | ✗ | https://learn.microsoft.com/en-us/azure/ai-foundry/ |

AI model discovery through monitoring scans

By default, AI models are discovered during SCA scans run through GitHub App, Bitbucket App, Azure DevOps App, and GitLab App. You can view the reported AI models under AI Inventory in the left sidebar.

To disable AI model discovery, set ENDOR_SCAN_AI_MODELS=false as an additional environment variable in the scan profile and assign the scan profile to the project.

Detect AI models

Configure finding policies and run an endorctl scan to detect AI models in your repositories and review the findings.

- Configure a finding policy to detect AI models with low scores and enforce organizational restrictions on specific AI models or model providers.

- Run an endorctl scan with the following command.

endorctl scan --ai-models --dependencies

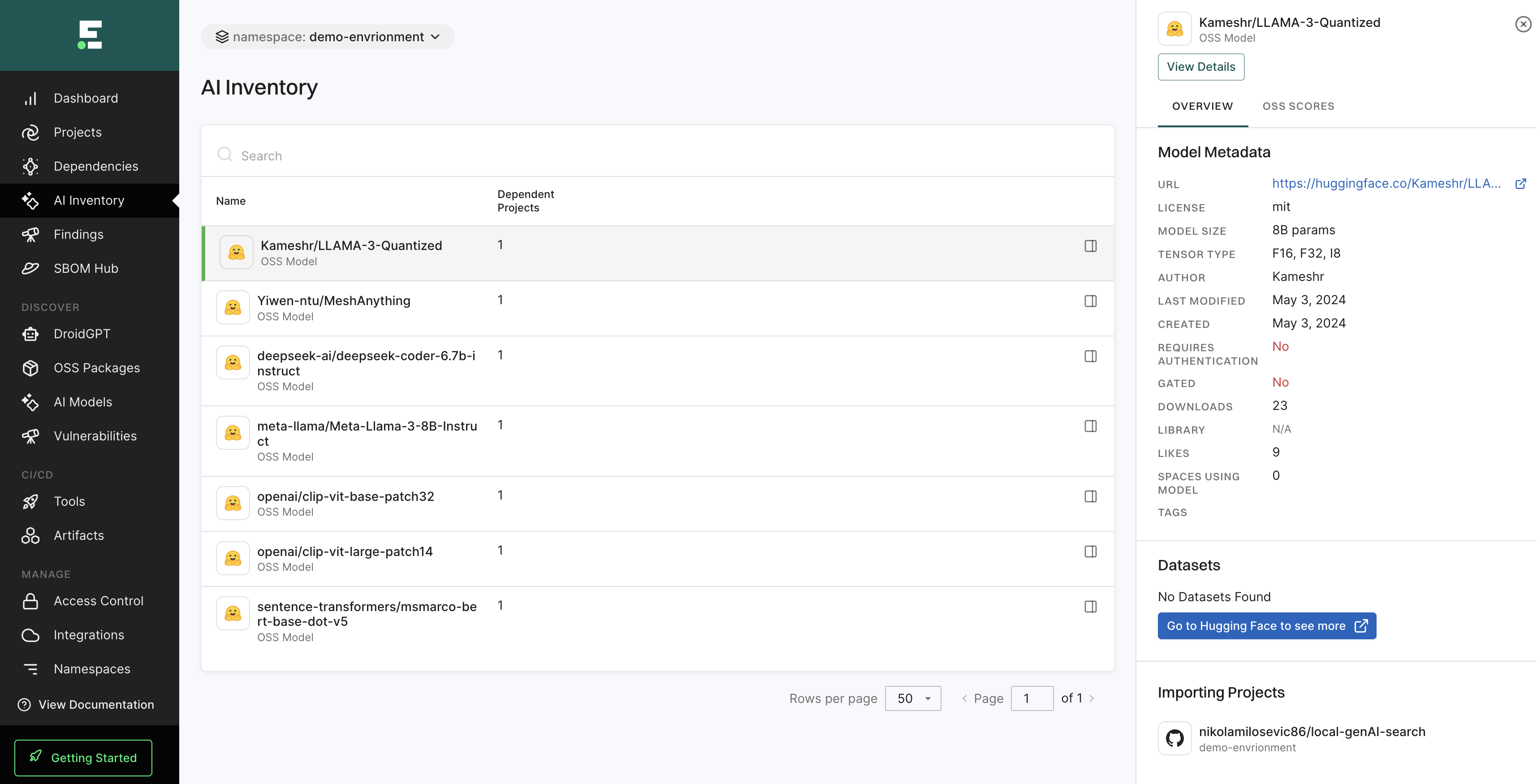
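If you run this scan from a script or a CI job, a small wrapper can fail early when endorctl is missing. The following is a minimal sketch that assumes endorctl is already installed and authenticated for your namespace. The function name `run_ai_model_scan` and the `ENDORCTL_BIN` override variable are hypothetical and exist only for this example. The scan flags are the ones shown above.

```shell
# Sketch only: run the AI model scan, failing early if the binary is missing.
# ENDORCTL_BIN is a hypothetical override used for this example; it is not
# an endorctl setting.
run_ai_model_scan() {
  local bin="${ENDORCTL_BIN:-endorctl}"
  if ! command -v "$bin" >/dev/null 2>&1; then
    echo "error: $bin not found on PATH" >&2
    return 127
  fi
  # Same flags as the documented command.
  "$bin" scan --ai-models --dependencies
}
```

Call `run_ai_model_scan` from the repository root. The function returns the exit status of endorctl, so a CI step can fail when the scan does.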

View AI models in your namespace

To view all AI models that are used in your namespace:

- Select AI Inventory on the left sidebar.

- Use the search bar to look for any specific models.

- Select a model to see its details.

- You can also navigate to Findings and choose AI Models to view findings.

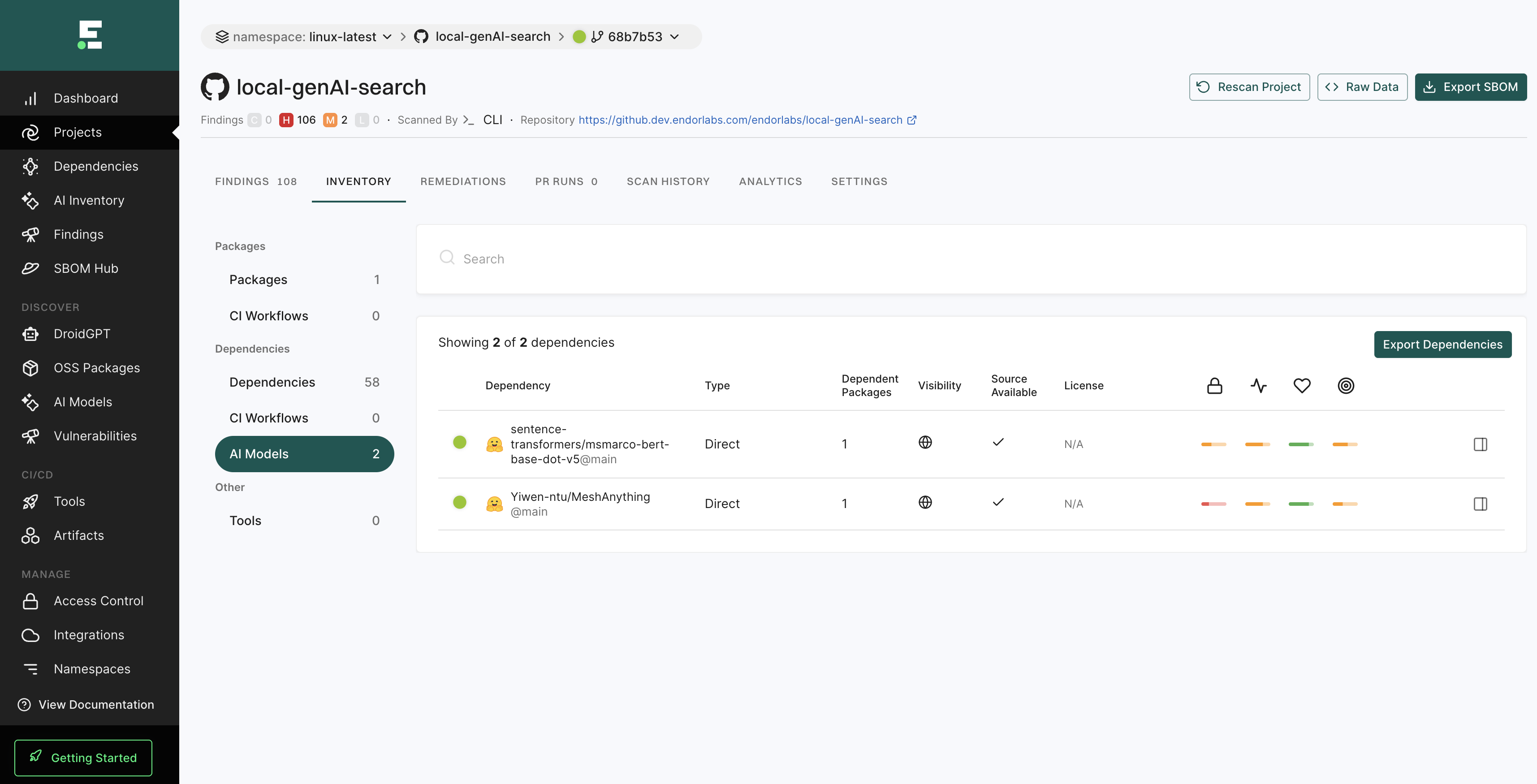

View AI models in a project

To view AI models that are used in a specific project:

- Select Projects from the left sidebar and select a project.

- Select Inventory and click AI Models under Dependencies to view findings.

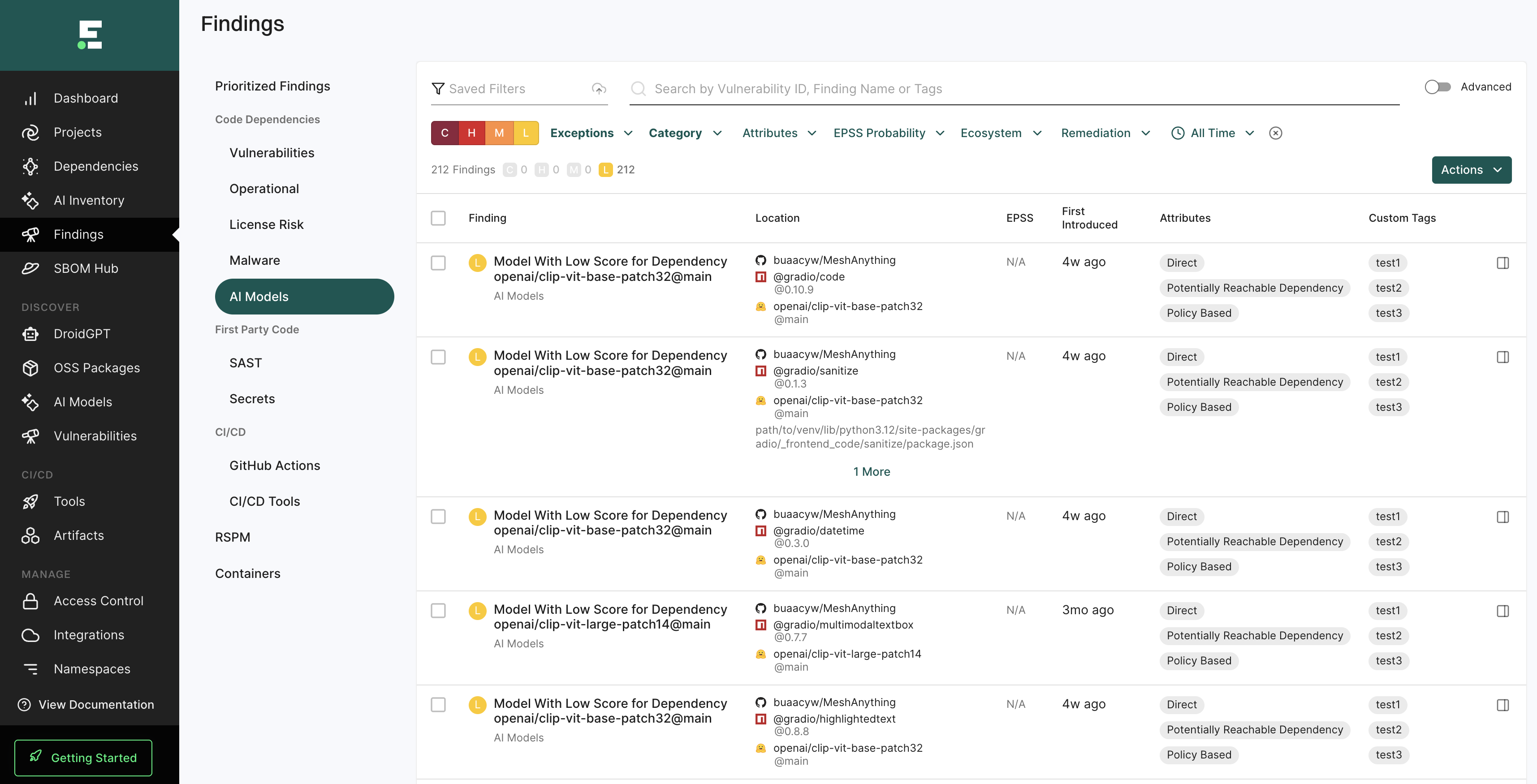

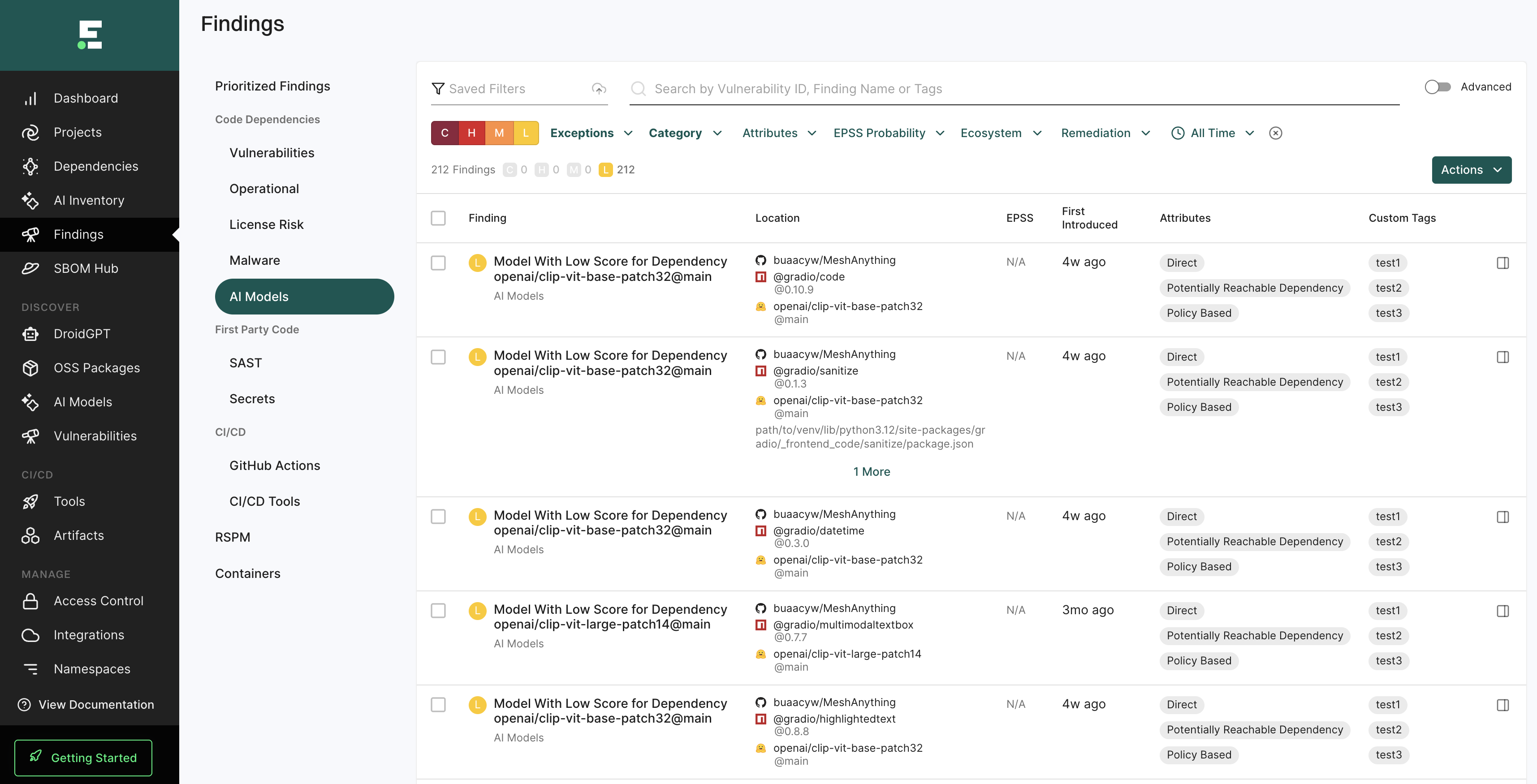

View AI model findings in your namespace

To view all AI model findings in your namespace:

- Select Findings from the left sidebar.

- Select AI Models from the Findings page.